Startup Opportunities in Machine Learning Infrastructure

From perspective of an investor & previous early-stage operator in the space

Today, machine learning (ML) has applications that once seemed impossible. Self driving cars & food service robotics are available in cities, personalized recommendations & image filters improve products we use everyday, and we fly through email responses with autocomplete. We also now have multiple instances of successful ML infrastructure companies that power these vertical applications, including Databricks, C3.ai, DataRobot, UiPath, and Scale AI. Nonetheless, when I was working at Scale AI, I saw even the most sophisticated teams have challenges scaling their ML in production. I also encountered challenges myself deploying ML models at Scale to automate parts of our products. Due to the nascency of “modern” ML (2011 was the first year a convolutional neural net - a type of deep learning model - won the most popular computer vision competition), ML infrastructure is not widely standardized and adopted. Most sophisticated ML applications are currently constrained to a small set of large tech companies with massive budgets and in-house expertise. However, many other companies without the time, knowledge, or resources also want to have ML as a tool in their toolbox. Infrastructure startup opportunities abound!

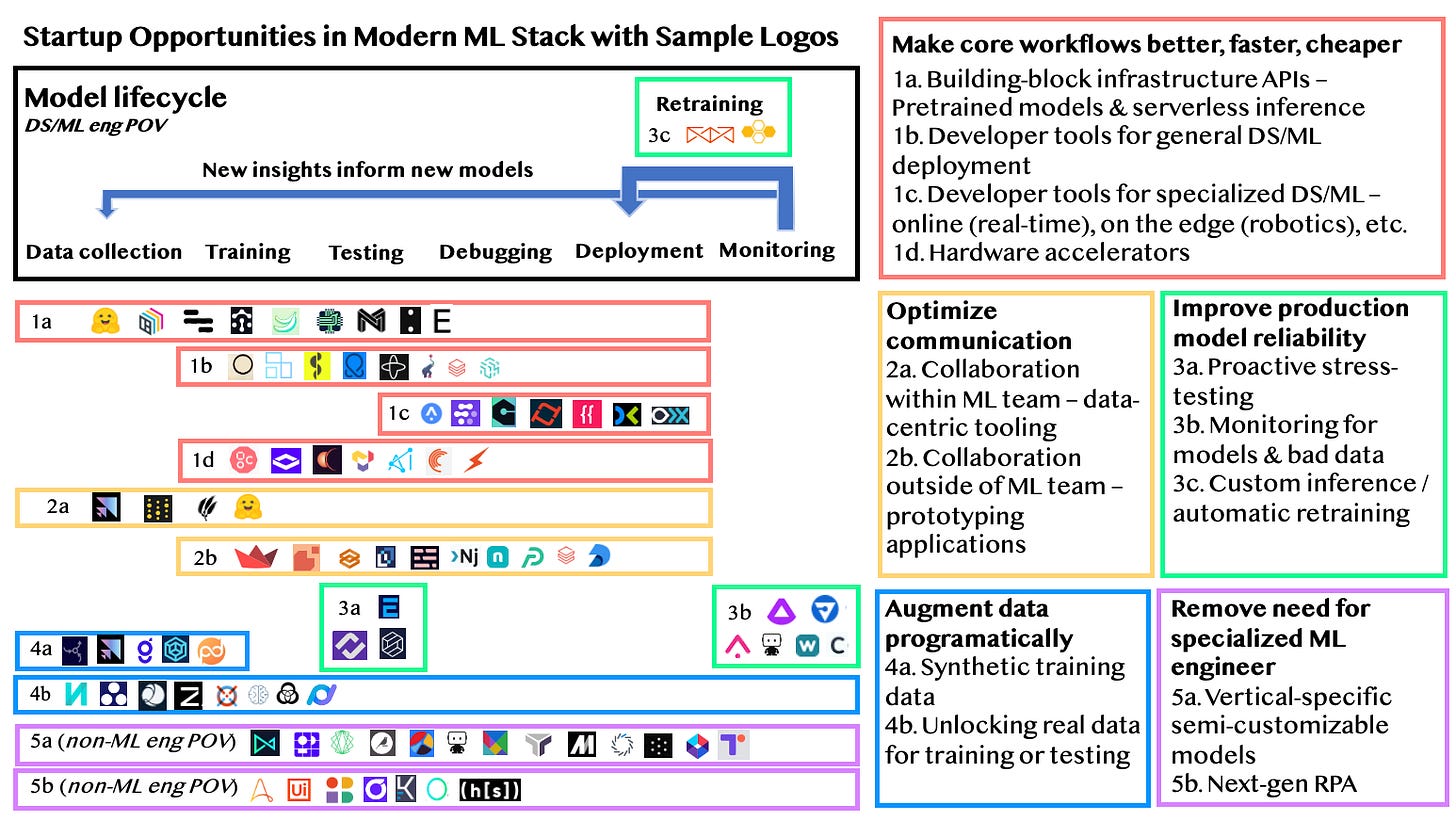

I am writing this post to more publicly broadcast some of the types of ML infrastructure companies I’m excited to see proliferate based on my time building as an ML engineer, working at Scale, and meeting companies & experts at Founders Fund. I divide the startup opportunities I see into five major categories, summarized in the graphic below with some examples of recent startups solving relevant pain points. (This is not a comprehensive market map. Lots of stealth early stage companies, larger growth stage startups [expanding from one category to others], & massive public companies are also going after these areas as well!) I’ll talk through these categories & provide more information on the startups pictured throughout the post.

The categories above are meant to deal with existing mainstream ways of doing ML (deep learning, gradient boosting, etc.) vs. more nascent methods (one-shot, etc.). If something nascent takes off, infrastructure will need to adapt. I also realize that some of the groupings overlap a bit; however, the main ideas are still helpful for me to map out the ecosystem: is a startup improving the existing standard development workflows, making production models more robust with newer techniques, unlocking more data for training or testing, aiding collaboration, or empowering non-ML engineers?

Opportunity 1: Make core workflows better, faster, cheaper

As an ML engineer, you must go through a lot of steps -- only a few of which are pure machine learning -- to put a model in production (shown in the graphic below). Many steps involve data engineering & developer operations (“devops”), which most ML engineers do not have expertise in; add even more disparate skill sets required if hardware or multiple models are involved. In addition, training a model or running a model’s predictions in production (“inference”) can be extremely costly, in terms of compute and engineering time. Lastly, fast model training as well as low latency inference are unsolved problems, making efficient model creation & real-time (“online”) predictions hard to obtain.

Idea a: Building-block infrastructure APIs

Sample startups: Hugging Face, BentoML, Cortex, Neuro, Banana, Larq, Neural Magic, Cohere, Exafunction

For ML engineers who are using standard architecture models with minimal customization required (e.g. BERT, YOLOv3), companies have emerged to offer the power of end-to-end ML via API. Now, ML engineers have multiple options to fine-tune and deploy pre-trained models without having to worry about devops challenges, including scaling compute & inference latency. Hugging Face offers a streamlined “AutoNLP” product which allows an ML engineer to upload data for fine-tuning, then automatically surfaces the best model for the job, trains that model on the provided data, and deploys the model. They also offer an “accelerated inference API'' to ship many transformers at scale (1k requests per second) with up to 100x performance boost in some cases.

Other companies have different strategies. Neuro automatically finds the fastest GPU for any job with different rates for “serverless” training & prediction (auto-scaling compute). Neural Magic eschews the mainstream GPU-centric approach and instead offers pre-trained “sparse” (faster/smaller) models as well as a way to sparsify your existing models, so that you can run model deployment on CPUs. Given that as much as 90% of the compute cost of an ML model is inference and the sizes of mainstream ML models are increasing faster than hardware can keep up, I’m excited to see an increased focus on deployment optimization.

This category of “ML in a box” for technically savvy users is particularly exciting as it promises to increase ML engineer productivity for standard use cases, saving their time and potentially lots of money (on more expensive infrastructure or even more engineers). The major challenge from an investment standpoint in this area is figuring out the go-to-market nuances -- which teams’ strategies will ensure that a customer uses their product first and doesn’t graduate out of their product if the company’s ML use cases become more integral (requiring more robust infrastructure) or sophisticated (requiring a more customized model)? Should startups in this category target startups through a self serve motion or teams at large companies through a traditional account based sales motion? What’s the right balance of standardization vs. flexibility?

Idea b: Developer tools for general model deployment

Sample startups (+ OSS): Outerbounds (Metaflow), AnyScale (Ray), Coiled (Dask), OctoML (Apache TVM), Temporal, Pachyderm, Databricks (Apache Spark), Grid AI

When more customized data science (DS) or ML engineering is required past the standard models, DS/ML engineers face a variety of challenges throughout training, testing, debugging, and deployment. From my talks with ML engineers outside of big tech companies, it’s not uncommon for ML models to take 6-12 months from time of data collection to production deployment. Startups have emerged - many based on open source software (OSS) frameworks - to give all engineers productivity benefits from automating infrastructure tasks and standardizing common workflows. For example, Outerbounds is an early-stage commercialization of the Metaflow project (spun out of Netflix), which aims to provide a full infrastructure stack for production-grade data science projects. Their focus is on data scientists being able to experiment with new ideas in production quickly even if the models are data-hungry & require integration with existing business systems. From the Metaflow docs & public roadmap, most of the current product applies to model development and feature engineering, though they seem to be headed into model operations in the future. I particularly enjoyed this visualization of how they are thinking about the ML stack…

Later stage companies include AnyScale, which offers scalability & observability for Ray (API for distributed applications with libraries for accelerating machine learning workloads), and Coiled, which is built on Dask (integrates with Python projects & natively scales them). OctoML has a larger focus on deployment in particular - ML engineers can use OctoML to automatically optimize most models’ performance for any cloud or edge hardware device while keeping accuracy constant. The common theme here is removing the need for ML engineers to be backend/infrastructure wizards to be successful. Given the traction of all of the open source projects, it will be interesting to see what types of use cases are most well suited for each commercialization.

Idea c: Developer tools for highly specialized model deployment (robotics, real-time)

Sample startups: Applied Intuition, Foxglove, Golioth, Tecton, Featureform, Decodable, Meroxa

This idea is technically a subset of the one above, but I wanted to give a bit more attention to the nuances of more complex ML use cases, such as robotics or real-time/online recommendations. The end-to-end process of deploying ML on robots is made more challenging by the vast amount of data from robotics sensors that must be trained on & monitored in production - a robot might need to act on updates from camera, LiDAR, other motion sensors, SLAM (mapping), and even internal signals like power. As a result, testing, debugging, and deployment can be difficult, and startups like Applied Intuition, Foxglove, and Golioth help address those lifecycle pain points, respectively.

Real-time ML deployment also comes with specific infrastructure challenges; online systems must have fast prediction capabilities as well as a pipeline for data stream processing. Feature stores like Tecton or Featureform are especially paramount here, as well as more general stream processing products like Apache Kafka/Flink or offerings from startups like Meroxa & Decodable. [Side note: Feature stores can be useful for reuse of features, governance, & collaboration for non-real time use cases as well.] For both robotics & specialized online infrastructures, the hair-on-fire pain is there for specific teams with critical use cases (self driving, warehouse robotics, drones, recommendation systems, consumer products), but the number of these teams is currently relatively small (however, likely with relatively large budgets). In addition, most have probably created existing internal solutions with varying degrees of efficacy & excitement to maintain/grow.

Idea d: Hardware accelerators

Sample startups: Graphcore, Next Silicon, Luminous Computing, Tenstorrent, Rain.AI, Cerebras, Tensil

Finally, hardware is also an option to make training and inference faster, better, and cheaper. Most of the companies working on unique chips have remained in semi-stealth and are pushing the boundaries of state-of-the-art research to offer solutions for high-performance “whale” use cases, where software solutions are not sufficient and customer budgets are extremely high. Lots of exciting progress has been reported, though benchmarks can be confusing to sort through. Also, there are the usual hardware & macro risks, including a recent chip shortage, outside of the normal product market fit risks of software-only companies.

Opportunity 2: Optimize communication

From my days as an engineer & PM, one of the biggest challenges building software is communication - the wrong product will get built if software engineers do not collaborate with other engineers (potentially causing redundancy or bad code architecture) or collaborate with stakeholders outside of engineering (potentially shipping something at odds with business goals). The same is true for ML engineering, and given the nuances of ML outside of software engineering, a set of tools has emerged to share ML knowledge & optimize velocity from training to deployment. I also talked about this category briefly in my last post, since many of these companies are useful for data scientists & analysts as well.

Idea a: Collaboration within ML team (data-centric experiment tooling)

Sample startups: Scale Nucleus, Weight & Biases, Aquarium, Hugging Face

ML engineers spend much of their time training and debugging models, and until recently the tooling to debug a bad training run or production results was siloed & meant for a single user. Startups in this category allow teams of ML engineers to collaborate by visualizing where models are failing, analyzing the results of experiments, and setting shared definitions & goals for new projects. Some of these tools, such as Scale’s Nucleus product, integrate with other parts of the ML stack conveniently; for example, ML engineers can jointly decide what data to send to Scale & label as a result of their models performing badly on that data. An incumbent solution here is Google Sheets with manually entered model run information!

Idea b: Collaboration outside of ML team (prototyping applications)

Sample startups: Streamlit, BaseTen, Gradio, Linea, Hex, Nextjournal, Noteable, Preset.io, Databricks, Deepnote

Another group of startups aim to tackle the challenges of data science (DS) & ML engineers collaborating outside of the DS/ML team. The dominant incumbent paradigm for this process is sharing zips of spreadsheets (e.g. for labelling) or URLs of Jupyter notebooks meant for a single player; one uncareful outside user can easily break a Jupyter notebook model given its unintuitive UX. Now, startups have emerged to support this communication in a first-class way, giving DS/ML engineers the power of devops & frontend engineering to more easily create shareable prototypes from their early models. Through fast prototyping, a feedback loop is created between stakeholders outside the ML team & the ML team. Streamlit is the most well-known company here, with a thriving open source community. Other newer startups have emerged with different focuses - for example, Hex on supporting more data scientist/analyst workflows (vs. ML), and BaseTen on complex ML deployment that scales as needed (overlapping a bit with pain points in the previous “core workflow improvements” category). The big questions from an investment perspective are around sizing the prototyping market and gauging how successful these companies will be in their pursuits of broader goals, which include general BI/metrics communication or ML deployment scaling.

Opportunity 3: Improve production model reliability

Outside of current ML engineering best practices, new workflows supported by startups’ offerings have emerged to handle challenges of making models more robust and manageable in production, especially at companies where large numbers of models are fine-tuned on a per-customer basis. Stress testing models before deployment, monitoring them in production, and automatically triggering retraining when beneficial are the major categories where I’ve seen in-house and external solutions. Ultimately, these techniques aim to keep ML accuracy high with minimum manual involvement from the ML engineering team.

Idea a: Proactive stress-testing

Sample startups: Efemarai, Kolena, Robust Intelligence

Similar to the concept of “unit testing” or “red teaming” in software or security engineering, some ML infrastructure startups are offering out-of-the-box tests or functionality to make user-specified edge case tests, ultimately providing more confidence that models are safe to deploy. There is currently no streamlined best practice around stress-testing models enough for production deployment, so these tools aim to fill that gap. A challenge common in this broader category is convincing the right people to start using these products in lieu of a more manual, bespoke workflow. From a GTM perspective, it can be challenging for individual ML engineers to have enough sway to get a tool adopted across the ML organization; however, it can also be challenging for an executive to feel the pain or have the context necessary to make a purchasing decision. In addition, given the uniqueness of companies’ workflows, ML services or consulting can be a necessary element of getting a product adopted in a larger organization.

Idea b: Monitoring for models & bad data

Sample startups: Arthur, Fiddler, Arize, DataRobot, Why Labs, Censius, Gantry

Teams utilizing ML can have hundreds or more models in production at the same time, deployed by many separate people using different data, architectures, tools, and processes. If there’s a potential error in even one of these many elements, the losses could be large. Monitoring companies aim to address that gap, similar to Datadog for observability or PagerDuty for engineering on-call. The question of when to alert a user that a model is performing badly can be subjective as well as what should be done in response to various levels of alerts. In addition, more mature ML infrastructure companies like DataRobot are moving into this area given the clear synergies between deploying a model and monitoring it in production.

Idea c: Custom inference / automatic retraining

Sample startups: Gantry, Bytewax

Certain complex machine learning challenges - like a real-time recommendations model or set of perception models that are used together for self driving - require more than the standard inference & retraining pipelines. Because setting up basic inference & retraining pipelines can already be quite challenging, this subcategory of tools is the most nascent of the three; however, for certain specialized users it is a hair-on-fire problem. Startups like Bytewax offer ways to build custom inference pipelines including A/B testing and branching, while those like Gantry aim to set up automatic retraining of models when it would be beneficial to do so (triggered by model monitoring in production or on a schedule). When should a custom inference pipeline be favored and what should be the conditions for retraining? These startups have opinions, but there is currently no industry-wide general consensus (though some use cases have widely adopted best practices).

Opportunity 4: Augment data programmatically

Most ML engineers now recognize that the accuracy of an ML model is highly dependent on the accuracy and amount of data it is trained on. Human labelling is particularly challenging, which has led to the rapid growth of companies like Scale AI. Another strategy for bootstrapping training is to generate data programmatically, either through creating almost entirely new data synthetically (especially for rare classes) or “unlocking” otherwise sensitive data with a variety of techniques.

Idea a: Synthetic training data

Sample startups: Synthesized, Scale AI, Gretel AI, SBX Robotics, Parallel Domain, Mostly AI, Hazy

To achieve near-perfect accuracy on complex machine learning use cases, the industry standard is combining data verified by humans with data generated automatically. A great example of this is from Waymo, where they utilize real world data many times over by applying transformations. Many robotic arm companies utilize a mix of simulation and real world scenarios for training and testing. Consequently, many startups have begun to offer ways of generating realistic new data from already annotated ground truth data. Some are particularly focused on verticals, like SBX Robotics or Parallel Domain, while others conveniently offer classic annotation and data management as well, like Scale AI. Gaming asset-creation focused companies like Unreal Engine or Unity also have clear advantages providing relevant tools for synthetic data generation.

Idea b: Unlocking real data for training or testing

Sample startups: Tonic AI, TripleBlind, LeapYear, Zama, Owkin, Apheris, Devron, Privacy Dynamics

Besides generating completely new data, existing real-world data is frequently unavailable due to PII sensitives or data-sharing regulations. A variety of approaches exist that can unlock such data including anonymization, differential privacy, federated learning, and homomorphic encryption. Many potential customers are outside of traditional tech - like healthcare and finance - and are completely blocked to even start doing basic analytics without some way of accessing their data safely.

Opportunity 5: Remove need for specialized ML engineer

This last category is the most different from the others, because the main user persona is not an ML engineer - instead, they are someone who might be technical or non-technical but where the efficiencies of ML are particularly helpful for their use case. Many of the companies in this category are platforms that democratize access to ML across broader organizations. However, though potentially vital to more user types, there are clear tradeoffs between how technical a user has to be and how general the set of solved use cases is.

Idea a: Vertical-specific semi-customizable models

Sample startups: Continual, Pecan, Corsali, Dataiku, Obviously AI, Datarobot, Tangram, Taktile, Mage, Noogata, Telepath

The broader tech industry has learned of many fruitful ways of using ML from the relatively few companies who are successful. Most of these companies have large teams of ML engineers employed with each use case, which is not a common luxury. Companies like Continual want to give the superpower of ML to data analysts - whether it’s being used in churn prediction, conversion rate optimization, or forecasting - through an end-to-end solution that integrates with the modern data stack. Mage and Tangram instead target product developers at early-stage startups. Other companies like Taktile are more vertical-focused; in Taktile’s case, targeting finance. Platforms that offer models for a variety of end users and use cases include Dataiku and Datarobot. And of course, the startups from Opportunity 1a as well as OpenAI's GPT-3 API could be applicable here, though they market more towards the ML engineer persona.

Idea b: Next-gen RPA

Sample startups: Automation Anywhere, UiPath, Instabase, Olive AI, Klarity, Rossum, Hyperscience

Robotic process automation (RPA) has exploded in recent years, a sort of “no code” automation tool for the enterprise. Many large use cases for RPA are replacing routine human tasks that don’t require machine learning, but as more of that type of work is automated away, it’s more clear how massive the additional amount of automation is that cutting-edge ML can enable. More mature players like UiPath and Automation Anywhere are expanding their reach, while upstarts like Instabase, Olive AI, and Klarity have more vertical-specific wedges in finance, health, and accounting. Also, the UX of setting up these types of workflows can frequently require many specialized “RPA developers” and RPA consulting firms which is a clear opportunity for incumbents and newer players to succeed providing a more intuitive and out-of-the-box onboarding experience.

In conclusion, I hope this post is helpful in creating a shared mental model of the ML infrastructure ecosystem and encourages anyone thinking of these or adjacent areas to reach out! I’m actively looking to refine my understanding of both the state of the art techniques as well as what ML, data, and full stack engineers (as well as nontechnical users) are practically doing to use machine learning to its full potential today. Though I believe the opportunities are large, the technical & go-to-market challenges are abundant for ML infrastructure startups, and I’d love to help in any way I can. In particular, outside of nerding out about tech / product, I love jamming on which market entry points are most strategic, scaling GTM & technical recruiting, enterprise contract creation / negotiation, and pricing, which I might generalize and expand on best practices in later posts.

Thanks especially to Markie, Christian, Eric, Alex, Dan, Russell, Varun, Jeff, Trae, Everett, Uma, Gordon, Bastiane, & many others for their thoughts & feedback!

Fascinating breakdown of the ML stack and tooling!